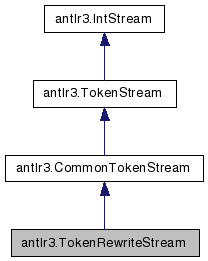

antlr3.TokenRewriteStream Class Reference

CommonTokenStream that can be modified.

Public Member Functions

| | Name | Description |
|---|---|---|
| def | __init__ | |
| def | rollback | Rollback the instruction stream for a program so that the indicated instruction (via instructionIndex) is no longer in the stream. |
| def | deleteProgram | Reset the program so that no instructions exist. |
| def | insertAfter | |
| def | insertBefore | |
| def | replace | |
| def | delete | |
| def | getLastRewriteTokenIndex | |
| def | setLastRewriteTokenIndex | |
| def | getProgram | |
| def | initializeProgram | |
| def | toOriginalString | |
| def | toString | |
| def | reduceToSingleOperationPerIndex | We need to combine operations and report invalid operations (like overlapping replaces that are not completely nested). |
| def | catOpText | |
| def | getKindOfOps | |
| def | toDebugString | |

Public Attributes

- programs
- lastRewriteTokenIndexes

Static Public Attributes

- string DEFAULT_PROGRAM_NAME = "default"
- int MIN_TOKEN_INDEX = 0

Static Private Attributes

- __str__ = toString

Detailed Description

CommonTokenStream that can be modified. Useful for dumping out the input stream after doing some augmentation or other manipulations.

You can insert stuff, replace, and delete chunks. Note that the operations are done lazily--only if you convert the buffer to a String. This is very efficient because you are not moving data around all the time. As the buffer of tokens is converted to strings, the toString() method(s) check to see if there is an operation at the current index. If so, the operation is done and then normal String rendering continues on the buffer. This is like having multiple Turing machine instruction streams (programs) operating on a single input tape. :)

Since the operations are done lazily at toString-time, operations do not screw up the token index values. That is, an insert operation at token index i does not change the index values for tokens i+1..n-1.
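To make the lazy model concrete, here is a minimal sketch in plain Python. It does not use the antlr3 runtime: `LazyRewriter` and its method names are hypothetical stand-ins, tokens are plain strings, and conflict handling between overlapping operations is left out. Operations are recorded against the original token indexes and applied only while rendering.

```python
class LazyRewriter:
    """Toy stand-in for a rewrite stream; NOT the antlr3 implementation."""

    def __init__(self, tokens):
        self.tokens = list(tokens)  # the token buffer is never modified
        self.inserts = {}           # token index -> text emitted before that token
        self.replaces = {}          # start index -> (stop index, text or None)

    def insert_before(self, index, text):
        # A later insert at the same index is placed in front of earlier ones.
        self.inserts[index] = text + self.inserts.get(index, "")

    def insert_after(self, index, text):
        self.insert_before(index + 1, text)

    def replace(self, start, stop, text):
        self.replaces[start] = (stop, text)

    def delete(self, start, stop):
        self.replace(start, stop, None)

    def to_original_string(self):
        return "".join(self.tokens)

    def to_string(self):
        out = []
        i = 0
        while i < len(self.tokens):
            out.append(self.inserts.get(i, ""))
            if i in self.replaces:
                stop, text = self.replaces[i]
                if text is not None:
                    out.append(text)
                i = stop + 1        # skip the replaced tokens
            else:
                out.append(self.tokens[i])
                i += 1
        out.append(self.inserts.get(len(self.tokens), ""))
        return "".join(out)


rw = LazyRewriter(["x", " ", "=", " ", "1", ";"])
rw.insert_before(0, "int ")  # does not shift any index
rw.replace(4, 4, "42")       # index 4 still refers to the token "1"
rw.insert_after(5, "\n")
print(repr(rw.to_string()))
print(repr(rw.to_original_string()))
```

Because the insert at index 0 is only a queued instruction, the later `replace(4, 4, ...)` still addresses the original token at index 4, and the original text remains available unchanged.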

Because operations never actually alter the buffer, you may always get the original token stream back without undoing anything. Since the instructions are queued up, you can easily simulate transactions and roll back any changes if there is an error just by removing instructions. For example,

    CharStream input = new ANTLRFileStream("input");
    TLexer lex = new TLexer(input);
    TokenRewriteStream tokens = new TokenRewriteStream(lex);
    T parser = new T(tokens);
    parser.startRule();

Then in the rules, you can execute

    Token t,u;
    ...
    input.insertAfter(t, "text to put after t");}
    input.insertAfter(u, "text after u");}
    System.out.println(tokens.toString());

Actually, you have to cast the 'input' to a TokenRewriteStream. :(

You can also have multiple "instruction streams" and get multiple rewrites from a single pass over the input. Just name the instruction streams and use that name again when printing the buffer. This could be useful for generating a C file and also its header file--all from the same buffer:

    tokens.insertAfter("pass1", t, "text to put after t");}
    tokens.insertAfter("pass2", u, "text after u");}
    System.out.println(tokens.toString("pass1"));
    System.out.println(tokens.toString("pass2"));

If you don't use named rewrite streams, a "default" stream is used as the first example shows.
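The idea of named instruction streams and rollback can be sketched in a few lines of plain Python. This is an illustration only, not the antlr3 code: `ProgramRewriter`, its method names, and the keyword-argument style are assumptions made for the example, and `rollback` here drops the indicated instruction together with everything queued after it, which is one reading of the description above.

```python
from collections import defaultdict

DEFAULT_PROGRAM_NAME = "default"


class ProgramRewriter:
    """Toy model of named rewrite programs; NOT the antlr3 implementation."""

    def __init__(self, tokens):
        self.tokens = list(tokens)
        # program name -> queued instructions, each (token index, text)
        self.programs = defaultdict(list)

    def insert_after(self, index, text, program=DEFAULT_PROGRAM_NAME):
        self.programs[program].append((index, text))

    def rollback(self, instruction_index, program=DEFAULT_PROGRAM_NAME):
        # Assumption: discard this instruction and all later ones.
        del self.programs[program][instruction_index:]

    def delete_program(self, program=DEFAULT_PROGRAM_NAME):
        self.rollback(0, program)

    def to_string(self, program=DEFAULT_PROGRAM_NAME):
        after = defaultdict(str)
        for index, text in self.programs[program]:
            after[index] += text
        return "".join(tok + after[i] for i, tok in enumerate(self.tokens))


rw = ProgramRewriter(["int", " ", "f", "(", ")", ";"])
rw.insert_after(5, " /* header */", program="pass1")
rw.insert_after(5, " /* source */", program="pass2")
rw.insert_after(0, "!!", program="pass2")  # a change we decide to undo
rw.rollback(1, program="pass2")            # drop instruction 1 of pass2

print(rw.to_string("pass1"))
print(rw.to_string("pass2"))
print(rw.to_string())  # default program: no instructions queued
```

Each program is an independent list of instructions over the same untouched token buffer, so undoing a change amounts to truncating that list.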

Definition at line 2025 of file antlr3.py.

Member Function Documentation

def antlr3.TokenRewriteStream.__init__(self, tokenSource = None, channel = DEFAULT_CHANNEL)

- Parameters:
  - tokenSource: A TokenSource instance (usually a Lexer) to pull the tokens from.
  - channel: Skip tokens on any channel but this one; this is how we skip whitespace...

Reimplemented from antlr3.CommonTokenStream.

def antlr3.TokenRewriteStream.rollback(self, args)

def antlr3.TokenRewriteStream.deleteProgram(self, programName = DEFAULT_PROGRAM_NAME)

def antlr3.TokenRewriteStream.insertBefore(self, args)

def antlr3.TokenRewriteStream.getLastRewriteTokenIndex(self, programName = DEFAULT_PROGRAM_NAME)

def antlr3.TokenRewriteStream.setLastRewriteTokenIndex(self, programName, i)

def antlr3.TokenRewriteStream.initializeProgram(self, name)

def antlr3.TokenRewriteStream.toOriginalString(self, start = None, end = None)

def antlr3.TokenRewriteStream.reduceToSingleOperationPerIndex(self, rewrites)

We need to combine operations and report invalid operations (like overlapping replaces that are not completely nested).

Inserts to same index need to be combined etc... Here are the cases:

    I.i.u I.j.v                           leave alone, nonoverlapping
    I.i.u I.i.v                           combine: Iivu

    R.i-j.u R.x-y.v | i-j in x-y          delete first R
    R.i-j.u R.i-j.v                       delete first R
    R.i-j.u R.x-y.v | x-y in i-j          ERROR
    R.i-j.u R.x-y.v | boundaries overlap  ERROR

    I.i.u R.x-y.v | i in x-y              delete I
    I.i.u R.x-y.v | i not in x-y          leave alone, nonoverlapping
    R.x-y.v I.i.u | i in x-y              ERROR
    R.x-y.v I.x.u                         R.x-y.uv (combine, delete I)
    R.x-y.v I.i.u | i not in x-y          leave alone, nonoverlapping

    I.i.u = insert u before op @ index i
    R.x-y.u = replace x-y indexed tokens with u

First we need to examine replaces. For any replace op:

1. Wipe out any insertions before op within that range.
2. Drop any replace op before that is contained completely within that range.
3. Throw exception upon boundary overlap with any previous replace.

Then we can deal with inserts:

1. For any inserts to same index, combine even if not adjacent.
2. For any prior replace with same left boundary, combine this insert with replace and delete this insert (the "R.x-y.v I.x.u" case above).
3. Throw exception if index in same range as previous replace.

Don't actually delete; make op null in list. Easier to walk list. Later we can throw as we add to index -> op map.

Note that I.2 R.2-2 will wipe out I.2 even though, technically, the inserted stuff would be before the replace range. But, if you add tokens in front of a method body '{' and then delete the method body, I think the stuff before the '{' you added should disappear too.

Return a map from token index to operation.
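The rules above can be sketched as a small standalone function. This is an illustrative reading of the case table, not the runtime's code: the `Op` dataclass, the `reduce_ops` name, and the use of `ValueError` are assumptions made for the example.

```python
import copy
from dataclasses import dataclass


@dataclass
class Op:
    kind: str       # "I" = insert before index, "R" = replace index..last
    index: int
    text: str
    last: int = -1  # only meaningful for "R"


def reduce_ops(rewrites):
    """Return {token index: op} after combining and dropping operations."""
    ops = [copy.copy(op) for op in rewrites]  # dropped slots become None

    # Pass 1: examine replaces against everything issued before them.
    for i, rop in enumerate(ops):
        if rop is None or rop.kind != "R":
            continue
        for j in range(i):
            prev = ops[j]
            if prev is None:
                continue
            if prev.kind == "I":
                if rop.index <= prev.index <= rop.last:
                    ops[j] = None             # insert swallowed by replace
            elif rop.index <= prev.index and prev.last <= rop.last:
                ops[j] = None                 # earlier replace fully covered
            elif not (prev.last < rop.index or prev.index > rop.last):
                raise ValueError("replace op boundaries overlap")

    # Pass 2: examine inserts against everything issued before them.
    for i, iop in enumerate(ops):
        if iop is None or iop.kind != "I":
            continue
        for j in range(i):
            prev = ops[j]
            if prev is None:
                continue
            if prev.kind == "I" and prev.index == iop.index:
                iop.text = iop.text + prev.text    # later text goes first
                ops[j] = None
            elif prev.kind == "R":
                if prev.index == iop.index:
                    prev.text = iop.text + prev.text
                    ops[i] = None                  # insert folded into replace
                    break
                if prev.index < iop.index <= prev.last:
                    raise ValueError("insert inside earlier replace range")

    result = {}
    for op in ops:
        if op is None:
            continue
        if op.index in result:
            raise ValueError("more than one operation per index")
        result[op.index] = op
    return result


reduced = reduce_ops([
    Op("I", 2, "a"),
    Op("I", 2, "b"),       # combined with the insert above
    Op("R", 5, "x", 7),
    Op("R", 4, "y", 8),    # covers 5..7, so the earlier replace is dropped
    Op("I", 4, "z"),       # same left boundary as the replace 4..8
])
for index in sorted(reduced):
    print(index, reduced[index])
```

Slots are set to `None` rather than removed so that list positions stay stable while walking, mirroring the note above about making the op null in the list.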

def antlr3.TokenRewriteStream.getKindOfOps(self, rewrites, kind, before = None)

def antlr3.TokenRewriteStream.toDebugString(self, start = None, end = None)

Member Data Documentation

string antlr3.TokenRewriteStream.DEFAULT_PROGRAM_NAME = "default" [static]

int antlr3.TokenRewriteStream.MIN_TOKEN_INDEX = 0 [static]

antlr3.TokenRewriteStream.__str__ = toString [static, private]

The documentation for this class was generated from the following file:
